The following is a preliminary analysis of planning faculty listed in the Guide to Undergraduate and Graduate Education in Urban and Regional Planning (17th edition, dated 2011) published by the Association of Collegiate Schools of Planning. I queried Google Scholar for publication/citation data for each of the 850+ regular faculty listed in the guide (the primary tool used was Harzing’s Publish or Perish; see http://www.harzing.com/pop.htm). Because of ambiguous names, misspellings, and similar problems, the data from these searches required extensive cleaning to match authors with their publications. The original dataset consisted of over 62,000 records (publications indexed by Google Scholar [GS]) associated with approximately 975,000 citations. Automated and manual cleaning reduced these numbers to 24,609 publications with 634,467 citations for planning faculty. This process is still underway, and I suspect the data are at least 85–90% clean at this point. The data are preliminary but should be reasonable estimates. A brief discussion of academic citations follows, and then summary results from the initial analysis.

Citations

The traditional means of assessing academic productivity and reputation is citation analysis. Citation analysis for scholarly evaluation has an extensive literature that weighs its appropriateness within and across disciplines and offers nuanced discussion of metrics (see, e.g., Adam, 2002; Garfield, 1972; Garfield & Merton, 1979; MacRoberts & MacRoberts, 1989, 1996; Moed, 2005). Recently, popular metrics like the h-index, g-index, and e-index have been adopted by GS to provide Web-based citation analysis, which was previously limited to proprietary citation indexes like Thomson Reuters (formerly ISI) Web of Knowledge (WoK) and SciVerse Scopus. This is the likely trajectory of citation analysis as open access scholarship becomes more pervasive. There is some debate, however, over whether GS’s inclusion of gray literature citations means its analyses draw from a different universe of publications to assess citation frequency and lineage. Scholarly activity represents approximately one-third to one-half (or more) of faculty effort, alongside teaching and outreach/service activities. In many universities/disciplines, scholarly productivity and reputation are primary factors in deciding promotion and tenure cases.
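For readers unfamiliar with the h-index and g-index mentioned above, the following is a minimal sketch of how the two metrics are commonly defined, computed from a list of per-publication citation counts. The function names and the sample counts are illustrative assumptions, not part of the analysis, and tools such as Publish or Perish may handle edge cases differently.

```python
def h_index(citations):
    """Largest h such that h publications each have at least h citations."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank
        else:
            break
    return h


def g_index(citations):
    """Largest g such that the top g publications together have at least
    g**2 citations (capped here at the number of publications)."""
    ranked = sorted(citations, reverse=True)
    total = 0
    g = 0
    for rank, count in enumerate(ranked, start=1):
        total += count
        if total >= rank * rank:
            g = rank
    return g


# Hypothetical citation counts for one author's five publications.
sample = [10, 8, 5, 4, 3]
print(h_index(sample), g_index(sample))
```

The g-index gives more weight to a few highly cited publications, so it is never smaller than the h-index for the same author.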

Results

This initial analysis focused on the citation activity of planning faculty by (a) current school, (b) the school from which they received their PhD (or other terminal degree), (c) years as a professor (time since terminal degree was used as a proxy), (d) rank, and (e) individual faculty. The results are as of mid-November 2013 and will be continually refreshed with GS searches and manual data input and correction.

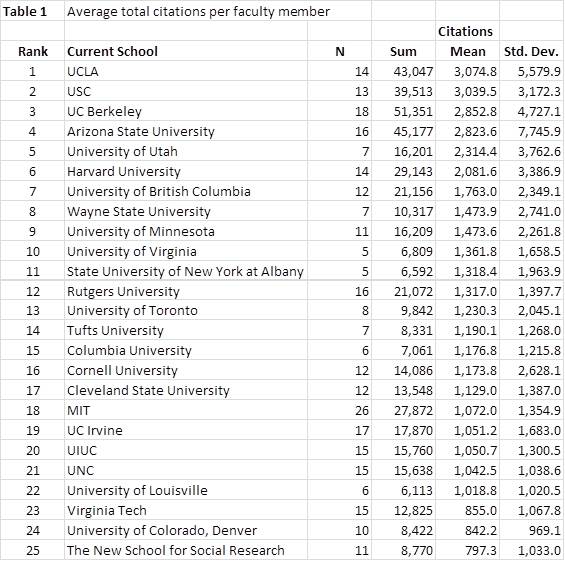

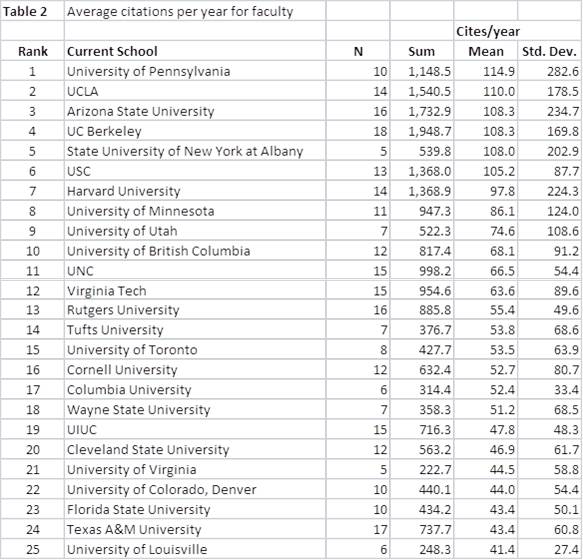

Table 1 lists the top 25 planning schools based on the average number of GS citations per faculty member. Table 2 lists the top 25 in terms of citations per year of service (or year since degree) to account for faculty age or experience. On average, the work of the UCLA and USC planning faculty members has been cited over 3,000 times, although these averages are strongly influenced by particular faculty members. In addition, planning faculty members at Penn, UCLA, ASU, Berkeley, SUNY Buffalo, and USC average over 100 citations per year.
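The two school-level measures described above can be sketched as follows. This is only an illustration under stated assumptions: the records are invented, the school names are placeholders, and the per-year figure is computed as the mean of each faculty member's own citations-per-year rate, which may not match how the tables were actually built.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (school, total GS citations, year of terminal degree).
faculty = [
    ("School A", 1200, 1993),
    ("School A", 300, 2005),
    ("School B", 90, 2008),
    ("School B", 4500, 1983),
]

AS_OF_YEAR = 2013  # the results are described as of mid-November 2013


def school_averages(records, as_of_year=AS_OF_YEAR):
    """Return {school: (mean citations per faculty member,
    mean citations per year since degree)}."""
    totals = defaultdict(list)
    per_year = defaultdict(list)
    for school, citations, degree_year in records:
        # Assumption: treat very recent graduates as having one year of service.
        years = max(as_of_year - degree_year, 1)
        totals[school].append(citations)
        per_year[school].append(citations / years)
    return {s: (mean(totals[s]), mean(per_year[s])) for s in totals}


for school, (avg_total, avg_per_year) in school_averages(faculty).items():
    print(school, avg_total, avg_per_year)
```

In the invented data, one long-serving, highly cited faculty member dominates School B's average, which is the same sensitivity to individual faculty noted above.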

Faculty Citations by School of Degree

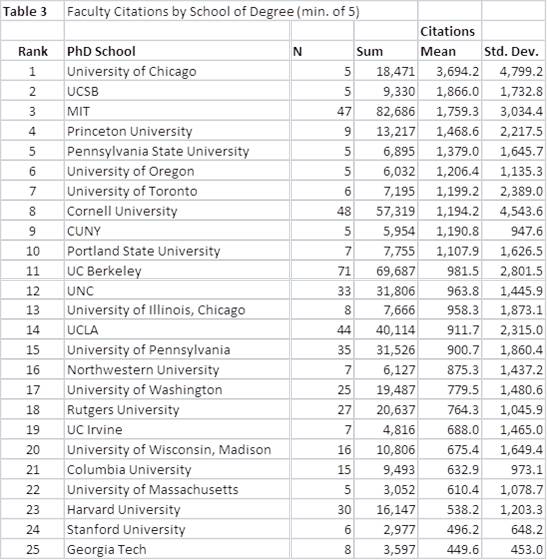

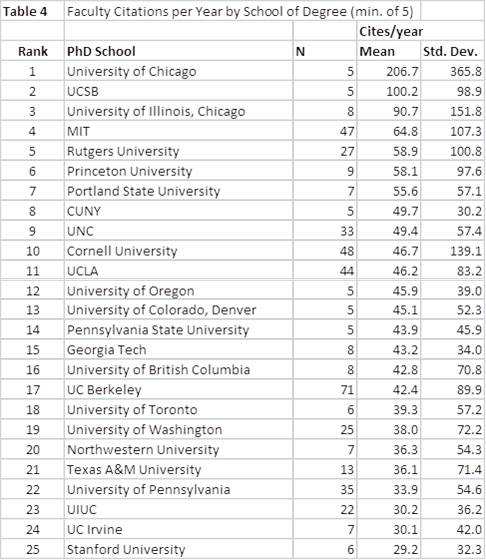

The citation data can also be compared by the school where each faculty member received their terminal degree (usually a PhD). Table 3 shows the top 25 universities (not necessarily planning degrees) in terms of average total citation output. Table 4 shows citations per year of experience. Graduates from the University of Chicago and UCSB have the highest levels of citation activity among planning faculty. Of note is that UCSB does not have a planning program.

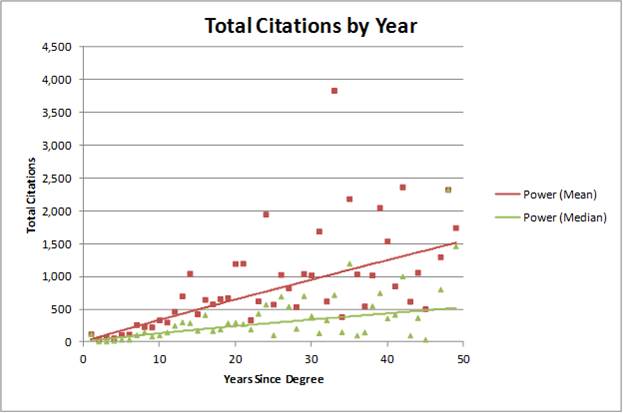

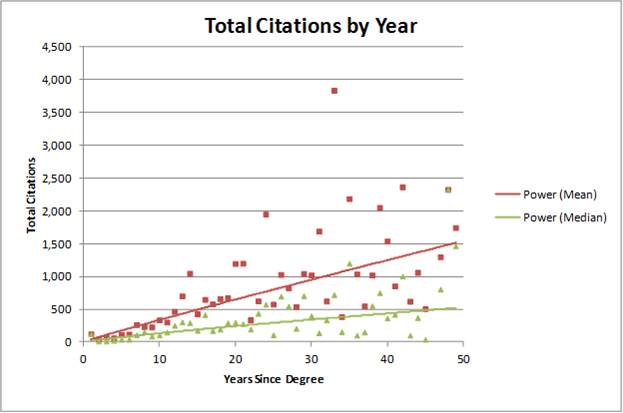

Although the significant variation in individual planning faculty citations affects department-level performance, there are distinct trends based on seniority. Figures 1 and 2 show that both mean and median citation totals increase with years of experience and rank. Currently, faculty with 5–6 years of experience (pre-tenure) average about 115 citations (median of 41), and those with 15–16 years of experience (around promotion to full professor) average over 500 citations (median of 300). However, these numbers do not control for types of institutions, teaching loads, and administrative activities. Over the course of a career (30–50 years), faculty average between 1,000 and 1,500 total citations. Among those currently at the rank of assistant professor, the average is over 150, with associate professors averaging over 500 and full professors over 1,400.
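The gap between mean and median in the figures above reflects how skewed citation totals are. The sketch below groups faculty into two-year experience bins (1–2, 3–4, 5–6, and so on) and reports both statistics for each bin. The records are invented for illustration, the bin boundaries are an assumption, and years of experience are assumed to be at least 1.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical records: (years since terminal degree, total GS citations).
records = [
    (5, 10), (6, 40), (5, 250),
    (15, 150), (16, 300), (16, 1050),
]


def summarize_by_experience(rows, bin_width=2):
    """Return {first year of bin: (mean citations, median citations)}."""
    bins = defaultdict(list)
    for years, citations in rows:
        # Bins start at year 1, so with bin_width=2 years 5 and 6 share bin 5.
        start = ((years - 1) // bin_width) * bin_width + 1
        bins[start].append(citations)
    return {start: (mean(v), median(v)) for start, v in sorted(bins.items())}


for start, (avg, med) in summarize_by_experience(records).items():
    print(f"{start}-{start + 1} years: mean={avg}, median={med}")
```

In each invented bin, a single highly cited person pulls the mean well above the median, which is why both statistics are worth reporting.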

Finally, there are many planning faculty with citation totals far exceeding the average levels discussed (the top 25 are shown in Table 5). All of the previous summary information for planning schools is based on GS citation totals for individual planning faculty. As mentioned previously, these totals will change over time as the data are corrected and updated. The overall list of planning faculty is available here for download. Your help in updating these numbers will be greatly appreciated and will be used for an upcoming complete analysis. If your citation totals are very different from what is shown, please feel free to send me (tom.sanchez@vt.edu) your updated CV or links to your GS Citation profiles.

If you are interested in citation analysis and bibliometrics, you can visit my Mendeley Group at http://www.mendeley.com/groups/1318573/citation-analysis-bibliometrics-and-webometrics/ and my paper on the topic at http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2157789.

References

Adam, D. (2002). Citation analysis: The counting house. Nature, 415(6873), 726–729.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178(4060), 471–479.

Garfield, E. (1979). Citation indexing: Its theory and application in science, technology, and humanities. New York, NY: Wiley.

MacRoberts, M. H., & MacRoberts, B. R. (1989). Problems of citation analysis: A critical review. Journal of the American Society for Information Science, 40(5), 342–349.

MacRoberts, M. H., & MacRoberts, B. R. (1996). Problems of citation analysis. Scientometrics, 36(3), 435–444.

Moed, H. F. (2005). Citation analysis in research evaluation. Dordrecht, The Netherlands: Springer.


But is citation count a good measure of whether a journal is actually read? I think not. The # of times an article has been read and the # of times an article has been cited are completely different measures. Might they be correlated in some way? Perhaps, but I don’t think the correlation would necessarily be that strong.

People read articles, not journals. Virtually no one actually reads the print version of a journal (with some exceptions, like the Harvard Law Review). My impression is that most people seek out articles, usually by doing Westlaw/Lexis searches and then reading whatever comes up (and, of course, SSRN, though PR/marketing skills likely play a much larger role over there than they do with Westlaw/Lexis). Now, in Westlaw/Lexis individuals will obviously know the author’s identity and what journal published the piece, and may decide what articles to actually read based on that information. I can’t control my identity / name recognition / etc., but I can control the journal I publish in. So, if I want to maximize readership, I have a strong incentive to publish in journals with good reputations that people would be more likely to read.

But are the journals with the best reputations the same as the journals with the best citation count? I say no. Several of the bloggers I linked to point out multiple instances where some of the most prestigious journals were ranked far below their perceived area of prestige in the citation count rankings. Given that so many people just don’t know that the East Dakota Law Review is cited more frequently than the George Washington Law Review, it’s not likely that I would gain any benefit from publishing in East Dakota LRev over GW LRev (and, in fact, I might lose readers, if a lot of readers skip over my piece because they believe East Dakota LRev isn’t prestigious enough and therefore its articles aren’t as good).

And this isn’t even getting into the other problems with citation count as a measure, like the fact that the high citation count could be due to one or two articles getting a huge amount of attention, or that certain types of articles don’t get as many citations as other types of articles for reasons that go way beyond quality (legal history is the classic example).

Of course, this raises another issue: if you can’t make placement decisions based on citation count, what can you look to? I honestly don’t know the answer to that question. I’ve personally just looked to what other authors the journals have published, and also relied on word-of-mouth and anecdotes. Might not be the best way to do things, but I think relying on a subjective conception of prestige makes more sense than relying on an objective measure that seems clearly flawed.
